Search Engine Optimization

#3 Search Engines


Make sure your website can be found!

When a user enters a query into a search engine, the engine scours billions of pieces of content and evaluates many factors to identify the most relevant answers.

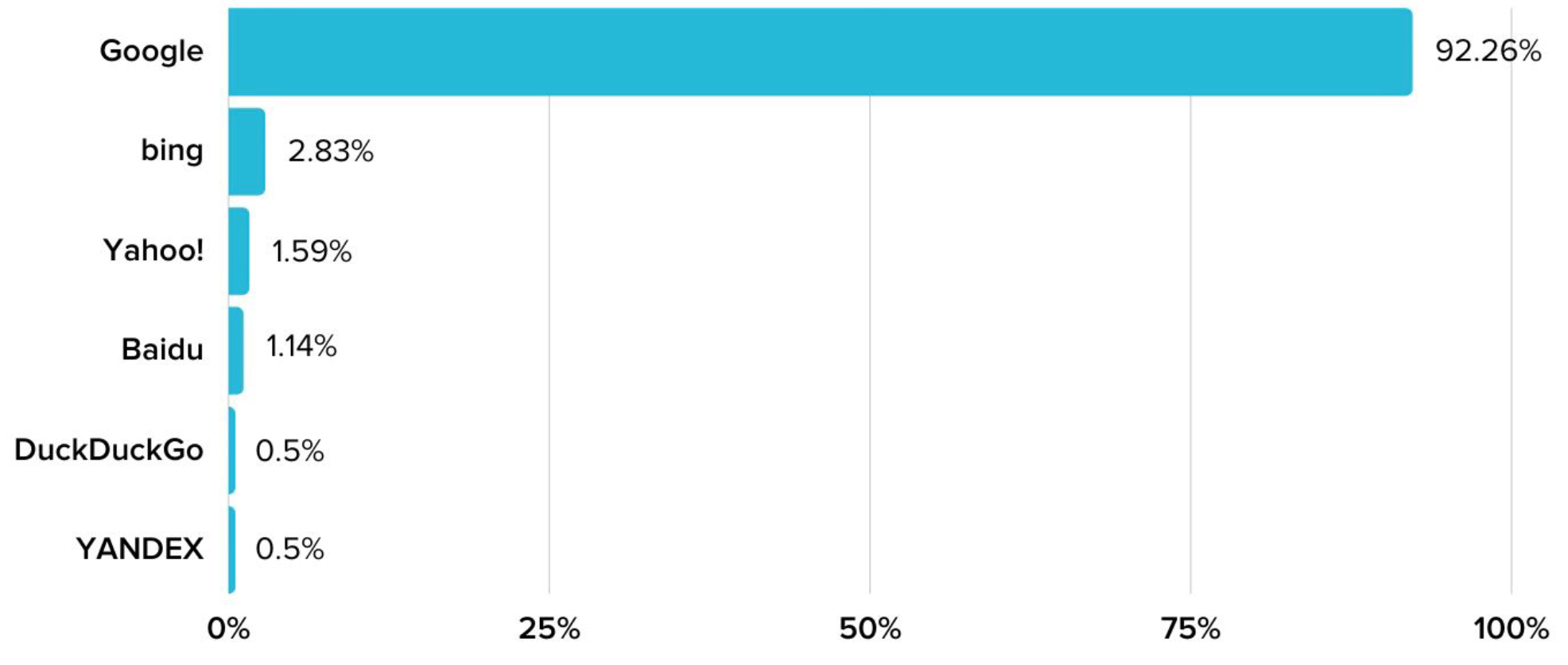

A search engine is an online tool that helps users find information on the internet. Google, which accounted for over 90% of all search queries in 2021, is likely the only search engine you need to focus on. An estimated 3.5 billion searches are conducted worldwide each day. Given Google’s dominance, it pays, from an SEO perspective, to understand Google’s search algorithms well.

Other search engines work on principles similar to Google’s. With Google’s dominance in mind, your website should be optimized for Google first.

Search engines discover, understand, and organize the content available on the internet through a process known as “crawling and indexing.” The content they find is then ordered by how well it matches a query, in a process called “ranking.” Ranking aims to surface the results most relevant to the questions searchers are asking.

To show up in search results, your content must first be visible to search engines. This is the essential first piece of SEO work: if search engines cannot find your website, its content will not show up in the SERPs (search engine results pages).

How do search engines work?

Search engines work through three main functions: crawling, indexing, and ranking.

Search engine crawling

Crawling is the process by which search engines continuously scan the web using programs known as “crawlers” or “bots.” These programs follow hyperlinks to discover new or updated content.

Googlebot is the term used to describe Google’s web crawler, which comes in two forms: a desktop crawler that simulates a user on a desktop computer and a mobile crawler that simulates a user on a mobile device.

Googlebot starts by fetching a few web pages and then follows the links on those pages to find new URLs. By following this path of links, the crawler discovers new content and adds it to Google’s index, known as Caffeine. Caffeine is a massive database of discovered URLs, which are later retrieved when a user searches for information that matches the content at a particular URL.
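To make the link-following idea concrete, here is a minimal breadth-first crawler in Python. It is a sketch under simplifying assumptions, not how Googlebot is implemented: the hypothetical fetch function looks pages up in an in-memory dictionary instead of making HTTP requests, and the example.com URLs are made up. Real crawlers also respect robots.txt, limit how fast they request pages, and revisit pages to pick up changes.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag in an HTML document."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(start_url, fetch, max_pages=100):
    """Breadth-first crawl starting from start_url.

    fetch(url) returns the page's HTML, or None if the page is unavailable.
    Returns every discovered URL in the order it was first found.
    """
    discovered = [start_url]
    seen = {start_url}
    queue = deque([start_url])
    fetched = 0
    while queue and fetched < max_pages:
        url = queue.popleft()
        html = fetch(url)
        fetched += 1
        if html is None:
            continue
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            absolute = urljoin(url, href)  # resolve relative links such as "/about"
            if absolute not in seen:       # queue each new URL only once
                seen.add(absolute)
                discovered.append(absolute)
                queue.append(absolute)
    return discovered


# Hypothetical in-memory "web" standing in for real HTTP requests.
WEB = {
    "https://example.com/": '<a href="/about">About</a> <a href="/blog/">Blog</a>',
    "https://example.com/about": '<a href="/">Home</a>',
    "https://example.com/blog/": '<a href="post-1">First post</a>',
    "https://example.com/blog/post-1": "<p>No outgoing links.</p>",
}

found = crawl("https://example.com/", WEB.get)
```

Here the blog post is discovered only because the blog index links to it, which is exactly the link path described above.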

Search engine index

After a website is crawled, search engines organize its data in an index. They aim to analyze and understand the pages, sort them into categories, and add them to the index. Essentially, the search engine index is an enormous database of all the pages that have been crawled, stored so that they can be understood and served as search results.
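A common way to organize crawled content for fast lookup is an inverted index, which maps each word to the pages that contain it. The sketch below assumes pages have already been reduced to plain text and uses hypothetical URLs; a real search engine index is far more elaborate than a word-to-page map.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(pages):
    """Build an inverted index: word -> set of URLs containing that word."""
    index = defaultdict(set)
    for url, text in pages.items():
        for word in tokenize(text):
            index[word].add(url)
    return index


def lookup(index, query):
    """Return the URLs that contain every word in the query."""
    words = tokenize(query)
    if not words:
        return set()
    results = set(index.get(words[0], set()))
    for word in words[1:]:
        results &= index.get(word, set())  # keep only pages that also contain this word
    return results


# Hypothetical crawled pages, already reduced to plain text.
pages = {
    "https://example.com/coffee": "How to brew coffee at home",
    "https://example.com/tea": "How to brew green tea",
    "https://example.com/about": "About our coffee shop",
}
index = build_index(pages)
```

A lookup for "brew coffee" then only has to intersect two small sets instead of rereading every page.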

Search engine ranking

When a search is performed, search engines scan their index for highly relevant content and then order it by how well it answers the query. This process of ordering search results by relevance is known as ranking. Typically, the higher a website ranks, the more relevant the search engine believes it to be for the query.
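The ordering step can be illustrated with a deliberately naive relevance score: count how often the query words appear on each page and sort by that count. This scoring rule is an assumption for illustration only, and the pages are hypothetical; real search engines combine many more signals than word counts.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def rank(pages, query):
    """Order URLs by a naive relevance score: total occurrences of the query words."""
    query_words = set(tokenize(query))
    scores = {}
    for url, text in pages.items():
        counts = Counter(tokenize(text))
        score = sum(counts[word] for word in query_words)
        if score > 0:  # pages with no matching words are not returned at all
            scores[url] = score
    # Highest score first; ties are broken alphabetically so the order is stable.
    return sorted(scores, key=lambda url: (-scores[url], url))


# Hypothetical indexed pages.
pages = {
    "https://example.com/espresso": "Espresso guide: grind coffee fine, brew espresso fast.",
    "https://example.com/filter": "Filter coffee guide: brew coffee slowly, enjoy coffee.",
    "https://example.com/tea": "Tea guide: steep tea leaves.",
}
results = rank(pages, "brew coffee")
```

The filter-coffee page ranks first because it mentions the query words more often; the tea page does not appear at all.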

If necessary, you can block search engine crawlers from accessing part or all of your website, or instruct search engines not to store specific pages in their index. Conversely, for your content to be discoverable by searchers, it must first be accessible to crawlers and indexable.
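Blocking crawlers is usually done with a robots.txt file at the root of the site. The sketch below uses Python’s standard urllib.robotparser module to check which URLs a hypothetical robots.txt allows Googlebot to fetch. Note that robots.txt controls crawling, not indexing: Google’s documentation states that a blocked URL can still be indexed if other pages link to it, so keeping a page out of the index calls for a noindex directive instead.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that keeps all crawlers out of /admin/ and /cart/.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse rules from text instead of fetching them

urls = [
    "https://example.com/blog/seo-basics",
    "https://example.com/admin/login",
    "https://example.com/cart/checkout",
]
# True means the rules allow Googlebot to crawl the URL.
allowed = {url: parser.can_fetch("Googlebot", url) for url in urls}
```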

Search results are ordered from most to least relevant, according to how well each page addresses the searcher’s query. SEO rankings refer to a website’s position on the search engine results page. Numerous ranking factors influence whether a webpage appears higher on that page, including the relevance of the content to the search term and the quality of the backlinks pointing to it.

Google algorithm

Once a user submits a search query, the search engine looks through its index and pulls out the best results. The resulting list is called the SERP (search engine results page).

Ranking factors

Google’s search algorithm refers to all the individual algorithms, machine learning systems and technologies that Google uses to rank websites.

When ranking, the algorithms consider various factors, including:

- Meaning of the query

The search engine must understand what the searcher is actually looking for, that is, the intent behind the words in the query.

- Relevance of pages

The webpage must be relevant to the search query.

- Quality of content

The search engine tries to pick the best results in terms of content quality.

- Usability of pages

Pages should also be easy to use for the person searching.

- Context and settings

The user’s location, settings, and search history are also taken into account.

Google has officially confirmed several ranking factors, but many others remain the subject of speculation and theories. From a practical standpoint, it is critical to concentrate on the factors that have a demonstrated impact on rankings.

Not everything people believe to be a ranking factor is actually used by search engines. Conversely, some confirmed ranking factors have only a negligible influence on rankings.

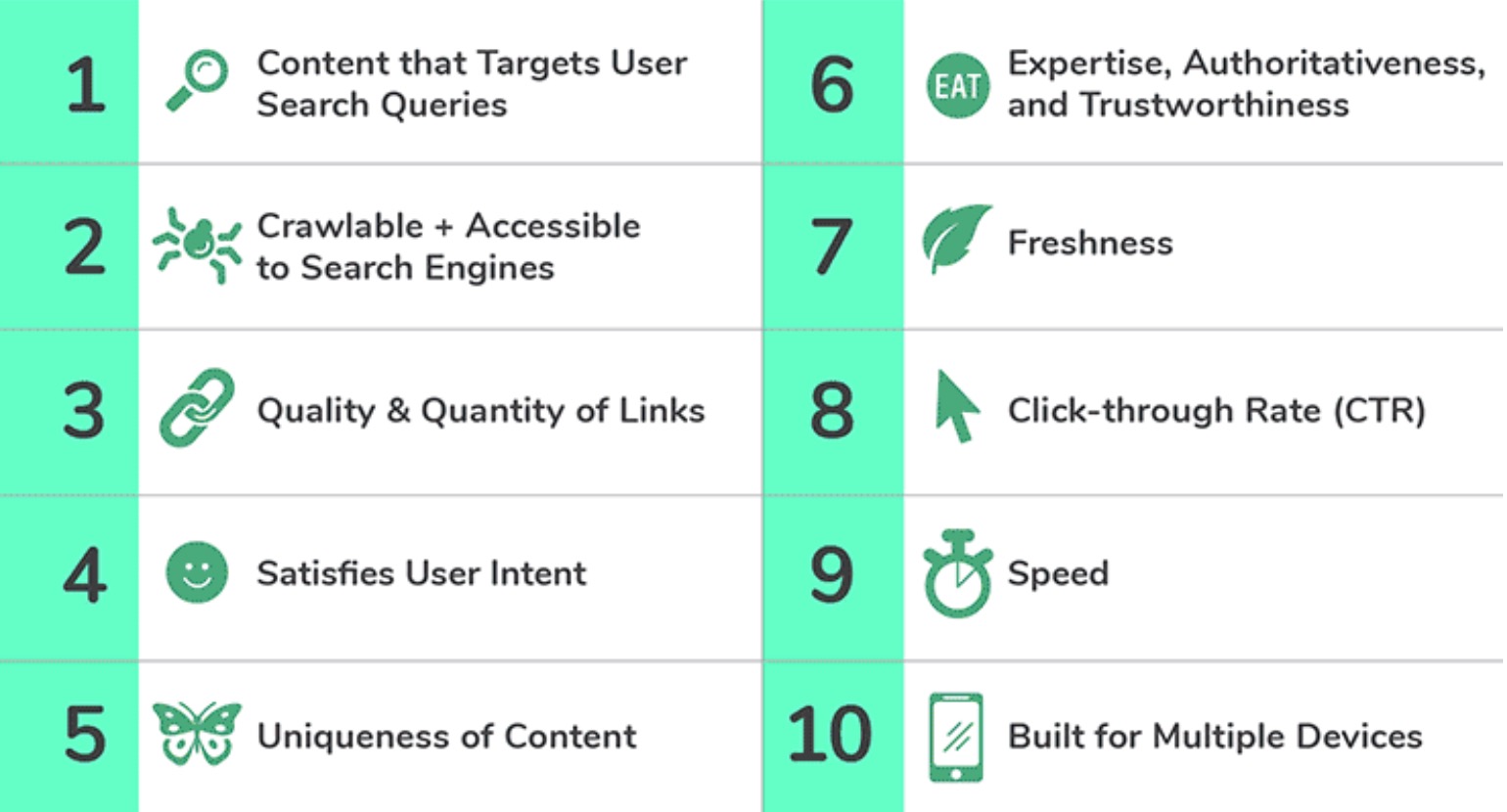

Google’s top ten ranking factors

Ranking factors are the criteria search engines apply when evaluating pages to determine the best order in which to return relevant results for a search query.

Understanding ranking factors is critical for executing SEO. You need to be familiar with them because they help create a better user experience, which can bring more leads and conversions for your business.

Below are ten critical Google ranking factors.

Well-targeted content

Creating well-targeted content is crucial. You must identify what people are searching for and produce high-quality content tailored to their requirements.

Crawlable website

To rank well, your website must be easy for search engines to find and crawl.

Quality and quantity of links

According to Google, the more high-quality pages link to your website, the more authority it gains.
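One well-known way to turn links into authority is PageRank, the link-analysis algorithm published by Google’s founders in 1998. The sketch below is the classic textbook version (power iteration with a damping factor of 0.85) run on a hypothetical five-site link graph. How Google’s current systems weigh links is not public, so treat this as an illustration of the principle only.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Classic PageRank computed by power iteration.

    links maps each page to the list of pages it links to. A page with no
    outgoing links spreads its score evenly over all pages.
    """
    pages = set(links) | {target for targets in links.values() for target in targets}
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}  # start with equal scores
    for _ in range(iterations):
        # Every page gets a small base score (the "random jump" term).
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page passes its score on, split equally among the pages it links to.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # Dangling page: distribute its score evenly over all pages.
                for target in pages:
                    new_rank[target] += damping * rank[page] / n
        rank = new_rank
    return rank


# Hypothetical link graph: three sites link to your-site.example, one also to other-site.example.
links = {
    "a.example": ["your-site.example"],
    "b.example": ["your-site.example"],
    "c.example": ["your-site.example", "other-site.example"],
    "your-site.example": ["a.example"],
    "other-site.example": [],
}
scores = pagerank(links)
```

Because more pages link to your-site.example, it ends up with a higher score than other-site.example; the scores always sum to 1.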

Content oriented toward user intent

Your content should be geared toward user intent and strive to offer comprehensive information.

Unique content

Creating unique content is critical to improving your chances of ranking. Avoid duplicate content on your website.
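A simple way to spot exact duplicates on your own site is to normalize each page’s text and compare hashes. The sketch below assumes you already have each URL’s main text (the URLs are hypothetical); it only catches pages that are identical after lowercasing and collapsing whitespace, whereas search engines also detect near-duplicates.

```python
import hashlib
import re
from collections import defaultdict


def fingerprint(text):
    """Hash the text after lowercasing it and collapsing runs of whitespace."""
    normalized = re.sub(r"\s+", " ", text.lower()).strip()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_duplicates(pages):
    """Group URLs whose normalized content is identical."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[fingerprint(text)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


# Hypothetical pages: the same product text is reachable under two URLs.
pages = {
    "https://example.com/shoes": "Red running shoes.\nFree delivery.",
    "https://example.com/shoes?ref=home": "red running  shoes. Free delivery.",
    "https://example.com/boots": "Leather hiking boots.",
}
duplicates = find_duplicates(pages)
```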

Expertise – Authority – Trust

Google focuses on expertise, authority, and trust to determine page quality. This assessment considers the content itself, its creator, and the website as a whole.

Fresh content

Update your content regularly to keep it fresh.

Call to action – CTA

Use your title tags and meta descriptions as a call to action that encourages searchers to click, improving the click-through rate (CTR) of your web pages.

Website speed

Loading time is crucial: for every additional second a page takes to load, you risk losing 20% of potential visitors.

Responsive website

Ensure that your website works seamlessly on any device and screen size, since approximately 60% of all visits come from mobile devices such as tablets and mobile phones.

Crawling: Can search engines find your pages?

Before your website can appear in the SERPs, it must be crawled and indexed. Start by checking how many of your web pages are in the index, for example by searching Google for site:yourdomain.com, to determine whether Google is discovering and crawling all the relevant pages while excluding unwanted ones.

If your website doesn’t appear in the search results, there could be several reasons:

- Your website is new and has not been crawled yet.

- The navigation of your website makes it hard for a robot to crawl it effectively.

- Your website contains basic code called “crawler directives” that blocks search engines.

- Your website has been penalized by Google for spammy tactics.

- Your website is not linked to from any external websites.
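One common crawler directive is the robots meta tag. The sketch below uses Python’s standard html.parser module to check whether a page’s HTML contains a noindex directive (or none, which is shorthand for noindex, nofollow) in a meta tag named robots or googlebot. It only inspects the HTML; directives can also come from robots.txt or the X-Robots-Tag HTTP header, which this example does not check.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # HTMLParser lowercases attribute names; values may be None.
        attributes = {name: value or "" for name, value in attrs}
        if attributes.get("name", "").lower() in {"robots", "googlebot"}:
            for directive in attributes.get("content", "").split(","):
                self.directives.add(directive.strip().lower())


def blocks_indexing(html):
    """True if a robots meta tag tells search engines not to index the page."""
    parser = RobotsMetaParser()
    parser.feed(html)
    # "none" is shorthand for "noindex, nofollow".
    return "noindex" in parser.directives or "none" in parser.directives


# Hypothetical page head that accidentally blocks indexing.
page_html = '<head><meta name="robots" content="noindex, nofollow"></head>'
result = blocks_indexing(page_html)
```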

Enable your website to be crawled by search engines

By instructing Googlebot how to crawl your website, you gain better control over which content ends up in the index. This is useful when essential pages are missing from the index or unimportant pages have been indexed by mistake. Directing search engines in this way helps ensure that only the relevant pages are indexed.

Can crawlers find all of your important content?

While a search engine may be able to crawl some parts of your site, other pages or sections may be harder for it to reach for various reasons. Make sure that search engines can access and index all the content you want users to see, across your entire website and not just the homepage.

Is your content hidden behind log-in forms?

Search engines cannot see pages that require users to log in, fill out forms, or answer surveys before the content is shown. Crawlers are not designed to log in.

Are you relying on search forms?

Search forms are not accessible to robots. It is a common misconception that search engines can automatically find and index all the content that visitors can reach through a search box on your website.

Is text hidden within non-text content?

Avoid using non-text media formats, such as images or videos, to display text you want indexed. While search engines are improving their ability to recognize images, there is still no guarantee that they can accurately read and interpret them.

Can search engines follow your site navigation?

To help crawlers move through your website from page to page, you need a clear path of links. Just as a crawler discovers your site through links from other sites, links within your site guide it to your other pages. If a page is not linked from any other page, the crawler may be unable to find it, even if you want search engines to find it.
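Pages that no internal link points to are often called orphan pages. The sketch below finds them by following internal links from the homepage and reporting every known page that was never reached. It assumes you already have a list of all your pages (for example, exported from your CMS) and a map of internal links; both are hypothetical here.

```python
from collections import deque


def find_unreachable(internal_links, all_pages, homepage):
    """Return pages that cannot be reached by following links from the homepage."""
    reachable = {homepage}
    queue = deque([homepage])
    while queue:
        page = queue.popleft()
        for target in internal_links.get(page, []):
            if target not in reachable:
                reachable.add(target)
                queue.append(target)
    return sorted(set(all_pages) - reachable)


# Hypothetical site structure: each path maps to the internal paths it links to.
internal_links = {
    "/": ["/products", "/blog"],
    "/products": ["/products/widget"],
    "/blog": ["/"],
}
all_pages = ["/", "/products", "/products/widget", "/blog", "/blog/old-post", "/landing-page"]
orphans = find_unreachable(internal_links, all_pages, "/")
```

Pages reported here are candidates for new internal links, since a crawler following your navigation would never reach them.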

Do you have clean information architecture?

Information architecture is the way content on a website is organized and categorized to improve user experience and discoverability. Effective information architecture is user-friendly: visitors should be able to navigate the website and find information without much effort or confusion.

Are you utilizing sitemaps?

A sitemap is a file that lists your website’s URLs to help crawlers discover and index them. To ensure that search engines can find your most important pages, you can create a sitemap file that conforms to Google’s standards and submit it via Google Search Console.
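Sitemaps follow the XML format defined by the sitemaps.org protocol, which Google supports. The sketch below builds a minimal sitemap with Python’s standard xml.etree.ElementTree module; the URLs and dates are hypothetical, and the lastmod element is optional. ElementTree escapes characters such as & automatically, which the protocol requires.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Build sitemap XML from (url, lastmod) pairs; lastmod may be None."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # special characters such as & are escaped automatically
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod  # W3C date format, e.g. 2024-01-15
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages to list in the sitemap.
sitemap_xml = build_sitemap([
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/blog/", None),
])
```

The resulting text would be saved as a file such as sitemap.xml at the site root and then submitted in Google Search Console.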